Simple PyTorch Project

Overview

This guide will walk you through a very simple PyTorch training pipeline.

Accompanying code for this article can be found here:

https://git.arts.ac.uk/ipavlov/WikiMisc/blob/main/SimpleCNN.ipynb

Loading Libraries

Every Python project starts by loading all the relevant libraries. In our case, the code for that is:
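The import cell from the notebook is not reproduced here. As a minimal sketch, assuming the pipeline needs only PyTorch, NumPy and Pillow (the notebook's actual imports may differ), it could look like this:

```python
from pathlib import Path                  # walking the dataset folders

import numpy as np                        # converting images to arrays
import torch                              # tensors and autograd
import torch.nn as nn                     # layers and loss functions
import torch.nn.functional as F           # stateless ops such as relu
from PIL import Image                     # reading PNG files
from torch.utils.data import Dataset, DataLoader

# Use a GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)
```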

Reading the Dataset

For this example we will use the MNIST dataset, containing 70,000 images of handwritten digits from 0 to 9.

The specific version of MNIST used in this example can be found here:

https://www.kaggle.com/datasets/alexanderyyy/mnist-png

Download the dataset and unpack it in the same directory as your Jupyter notebook.

The unpacked dataset consists of train and test folders containing images for model training and evaluation. Each of the two folders has 10 sub-folders, one per digit.

To train our model, we need to know the file names and labels of all images in the dataset. A simple way to do this is demonstrated in the code below:
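The notebook cell is not shown here, so the following is only a sketch of one possible approach. It assumes each sub-folder is named after the digit it contains and holds `.png` files; the helper name `list_images` is hypothetical.

```python
from pathlib import Path


def list_images(root):
    """Return (paths, labels) for a folder laid out as root/<digit>/<image>.png."""
    paths, labels = [], []
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # skip stray files next to the digit folders
        for image_path in sorted(class_dir.glob("*.png")):
            paths.append(str(image_path))
            labels.append(int(class_dir.name))  # assumption: folder name is the digit
    return paths, labels
```

With the dataset unpacked next to the notebook, `list_images("train")` and `list_images("test")` would return the file names and labels for each split. Adjust the paths if the archive unpacks into an extra parent folder.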

Note: Different datasets will require different approaches.

Dataset Class

The Dataset class provides the functionality our training and evaluation pipeline needs, such as loading images and their labels and applying image transformations.

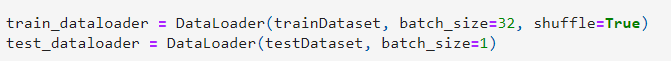
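For readers who cannot view the screenshot, here is a minimal sketch of such a class. It is not necessarily the notebook's implementation: the class name `DigitDataset` and the optional `transform` argument are assumptions, and images are loaded as single-channel tensors scaled to the range [0, 1].

```python
import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset


class DigitDataset(Dataset):  # hypothetical name
    def __init__(self, paths, labels, transform=None):
        self.paths = paths          # list of image file names
        self.labels = labels        # list of integer labels, same order
        self.transform = transform  # optional callable applied to each tensor

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, index):
        # Load as 8-bit grayscale and scale pixel values to [0, 1].
        image = Image.open(self.paths[index]).convert("L")
        pixels = np.asarray(image, dtype=np.float32) / 255.0
        tensor = torch.from_numpy(pixels).unsqueeze(0)  # shape (1, H, W)
        if self.transform is not None:
            tensor = self.transform(tensor)
        return tensor, self.labels[index]
```

A PyTorch dataset only has to implement `__len__` and `__getitem__`; everything else, including batching and shuffling, is handled by the DataLoader.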

To train on mini-batches, we need to wrap the dataset in a DataLoader.

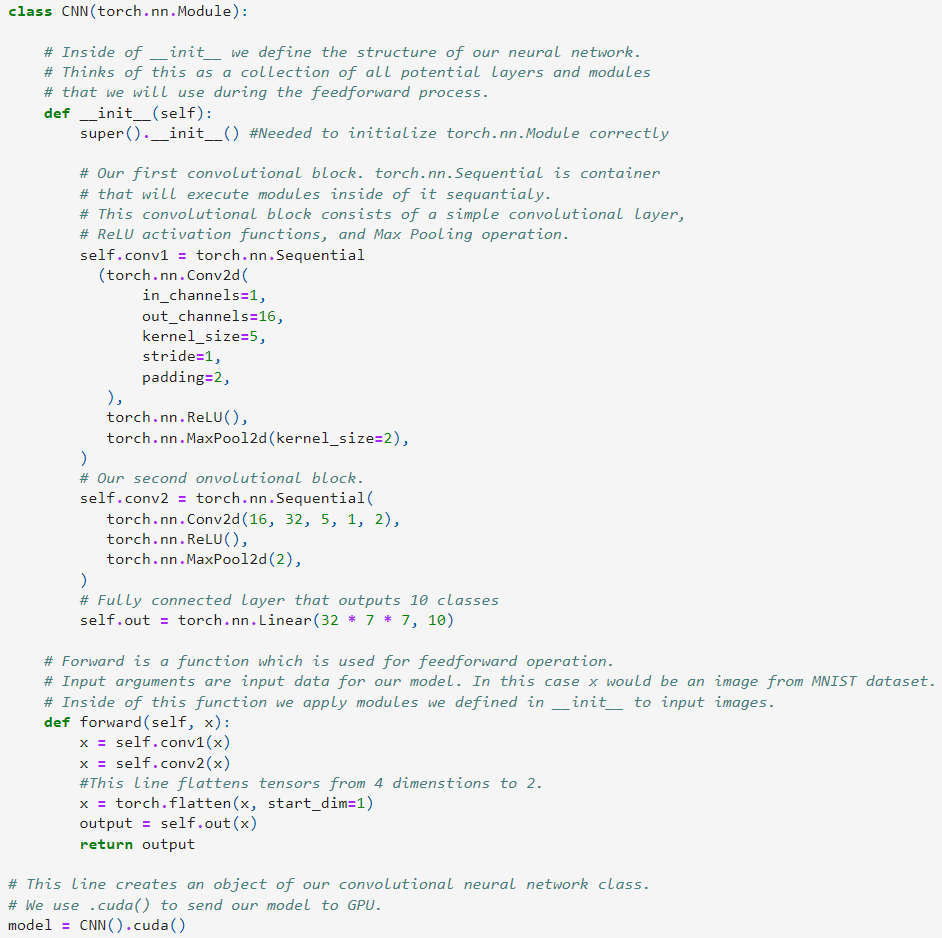
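The sketch below shows what a DataLoader does, using random tensors as a stand-in for the real images so that it runs without the dataset. The batch size of 32 is an arbitrary choice, not a value taken from the notebook.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in data: 100 random "images" shaped like MNIST, with random labels.
images = torch.rand(100, 1, 28, 28)
labels = torch.randint(0, 10, (100,))
dataset = TensorDataset(images, labels)

# shuffle=True reorders the samples at the start of every epoch.
loader = DataLoader(dataset, batch_size=32, shuffle=True)

batch_images, batch_labels = next(iter(loader))
print(batch_images.shape)  # torch.Size([32, 1, 28, 28])
print(batch_labels.shape)  # torch.Size([32])
```

Shuffling is usually enabled for the training loader only; the evaluation loader can iterate in a fixed order.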

Model Class

Our model classifies each input image into one of 10 classes. Below is the code for our simple convolutional neural network. The comments in the code provide additional explanation.

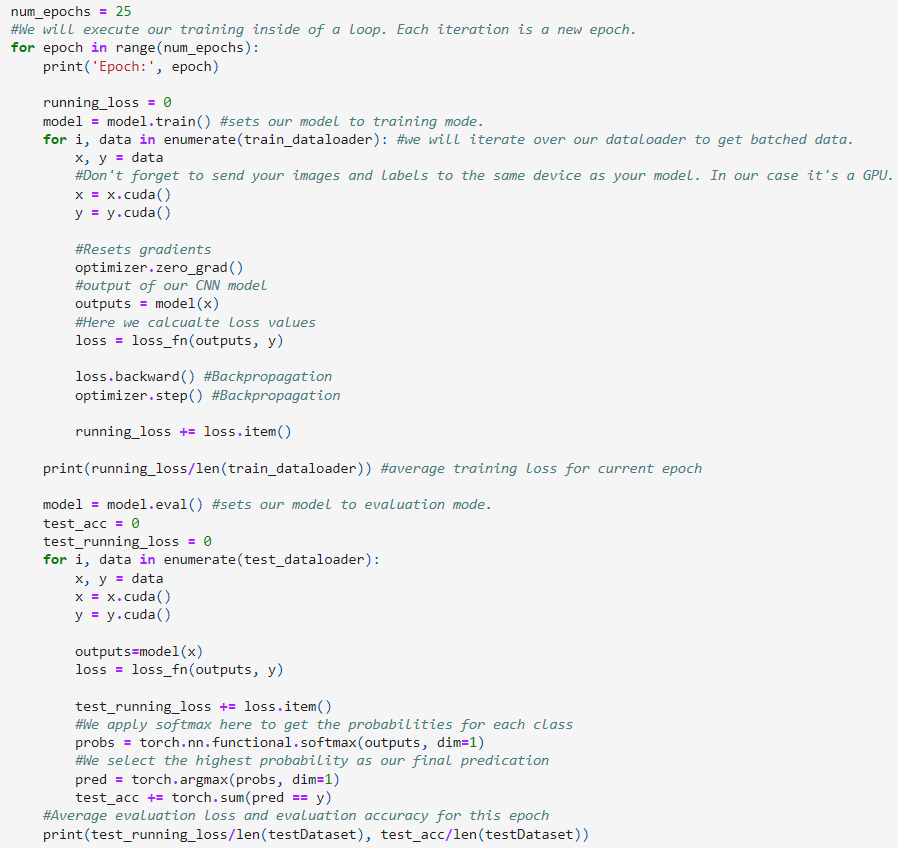
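As a text alternative to the screenshot, here is a sketch of a small CNN for 28x28 grayscale input. The layer sizes are illustrative assumptions and may not match the notebook's architecture.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SimpleCNN(nn.Module):
    def __init__(self, num_classes=10):
        super().__init__()
        # padding=1 with a 3x3 kernel keeps the spatial size unchanged.
        self.conv1 = nn.Conv2d(1, 16, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.pool = nn.MaxPool2d(2)  # halves height and width
        self.fc = nn.Linear(32 * 7 * 7, num_classes)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # (N, 16, 14, 14)
        x = self.pool(F.relu(self.conv2(x)))  # (N, 32, 7, 7)
        x = torch.flatten(x, start_dim=1)     # (N, 32 * 7 * 7)
        return self.fc(x)                     # raw logits, one per class


model = SimpleCNN()
logits = model(torch.zeros(4, 1, 28, 28))
print(logits.shape)  # torch.Size([4, 10])
```

The model returns raw logits rather than probabilities because `nn.CrossEntropyLoss` applies the softmax internally.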

Training and Validation

Below is the code for our training and validation procedure.
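The notebook cell is not reproduced here, so the following is a generic sketch of a training and validation loop, not necessarily the one used in the notebook. To keep it runnable on its own, it uses a tiny linear model and random data as stand-ins; the function names, the Adam optimizer and the learning rate are assumptions.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, loader, loss_fn, optimizer, device):
    model.train()  # enable training behaviour (dropout, batch norm updates)
    total_loss = 0.0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()                  # clear gradients from the last step
        loss = loss_fn(model(images), labels)
        loss.backward()                        # backpropagate
        optimizer.step()                       # update the weights
        total_loss += loss.item() * images.size(0)
    return total_loss / len(loader.dataset)    # mean loss per sample


@torch.no_grad()  # no gradients are needed for evaluation
def evaluate(model, loader, device):
    model.eval()
    correct = 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        predictions = model(images).argmax(dim=1)
        correct += (predictions == labels).sum().item()
    return correct / len(loader.dataset)       # accuracy in [0, 1]


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-ins so the sketch runs without the dataset or the CNN.
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10)).to(device)
data = TensorDataset(torch.rand(64, 1, 28, 28), torch.randint(0, 10, (64,)))
train_loader = DataLoader(data, batch_size=16, shuffle=True)
val_loader = DataLoader(data, batch_size=16)

loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

for epoch in range(2):
    train_loss = train_one_epoch(model, train_loader, loss_fn, optimizer, device)
    val_accuracy = evaluate(model, val_loader, device)
    print(f"epoch {epoch + 1}: loss={train_loss:.4f} accuracy={val_accuracy:.2%}")
```

In a real run, the stand-in model and loaders would be replaced by the CNN and the DataLoaders built from the train and test folders.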